About

I received my Master's in Robotics from the University of Maryland, College Park, and my Bachelor's in Mechanical Engineering from Vellore Institute of Technology.

I work on trustworthy perception systems that operate under uncertainty in safety-critical domains such as medical imaging and robotics. My research focuses on uncertainty-aware deep learning, explainable computer vision, and multi-modal perception. I emphasize architectures that are both theoretically grounded and deployable on real-world systems, from clinical pipelines to embedded robotic platforms.

Teaching Assistant

University of Maryland, College Park

ENPM 808A: Introduction to Machine Learning

INST 452: Health Data Analytics

Professional Experience

Instacart

Machine Learning Intern

InterDigital Inc

Research and Innovation Intern

Spyne.ai

Computer Vision Engineer

Publications

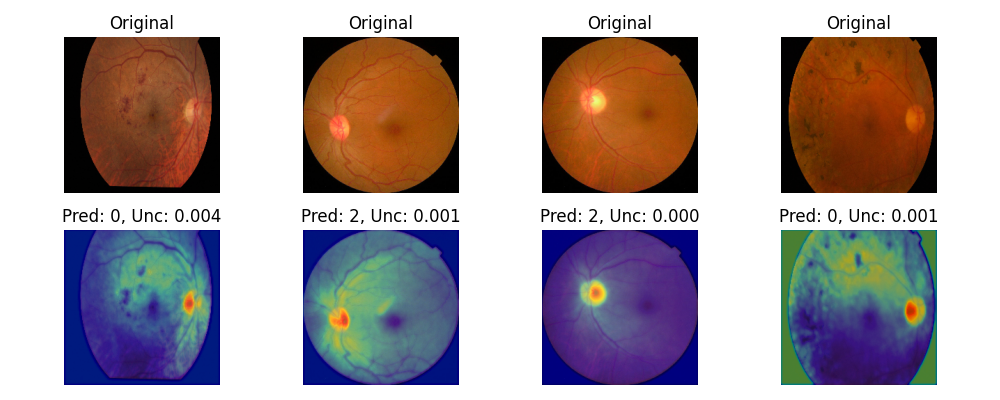

BUCAN: Bayesian Uncertainty-aware Classification with Attention Networks for Medical Images

AAAI 2026 Bridge Program

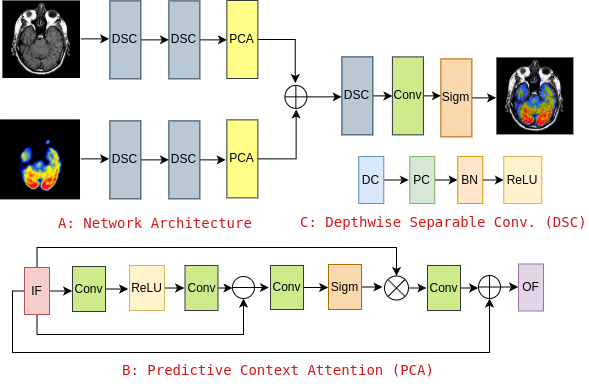

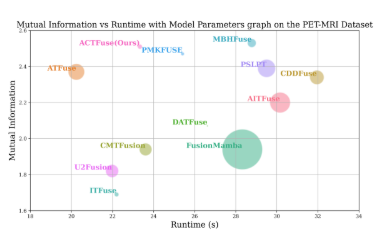

LightFusionNet: Lightweight Dual-Stream Network with Predictive Context Attention for Efficient Medical Image Fusion

AAAI 2026 Bridge Program

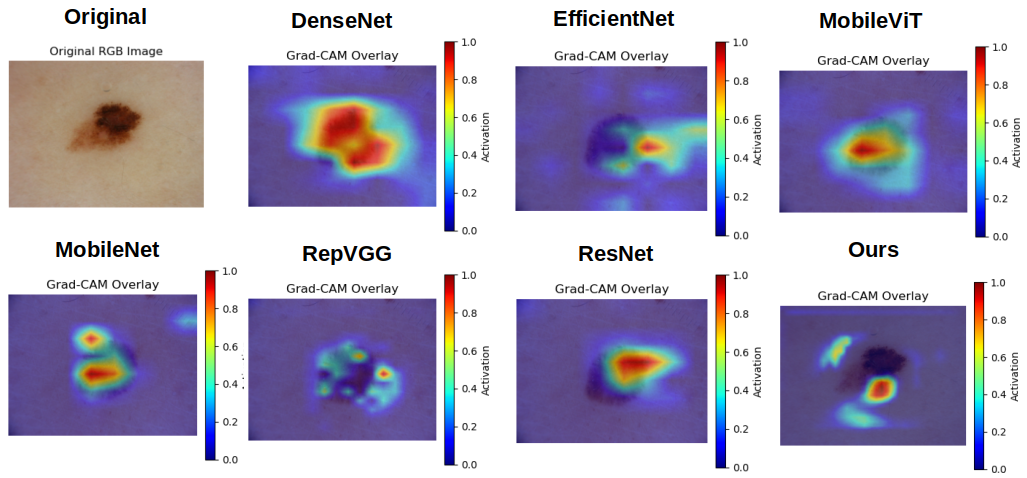

BASIC: Bayesian Spiral Attention Classifier for Interpretable Medical Image Classification

Preprint

TAFIE: Transformer-Assisted Fusion with Integrated Entropy Attention for Multimodal Medical Imaging

AAAI 2026 Bridge Program

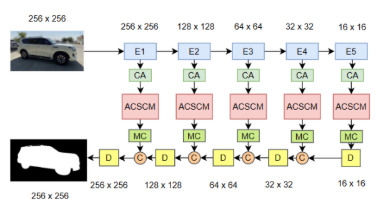

CRAUM-Net: Contextual Recursive Attention with Uncertainty Modeling for Salient Object Detection

Preprint

MAFFuse: Multi-Attention Fusion Network for Efficient and Robust Image Fusion

Preprint

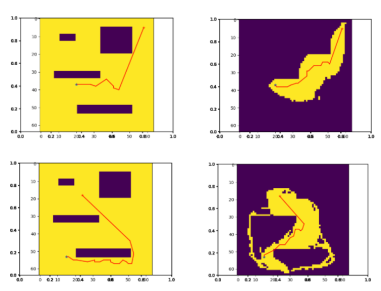

CBAGAN-RRT: Convolutional Block Attention Generative Adversarial Network for Sampling-Based Path Planning

CVIP 2025

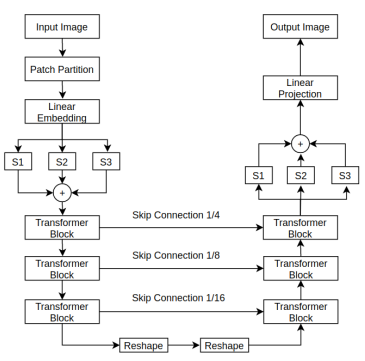

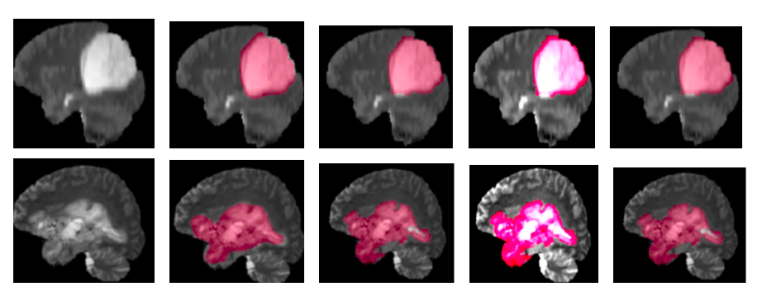

ViTBIS: Vision Transformer for Biomedical Image Segmentation

MICCAI 2021 Workshop

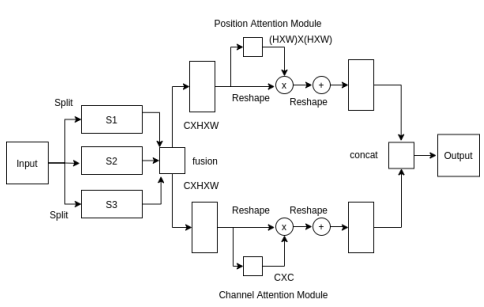

Dual Multi-Scale Attention Network

ICIAP 2022

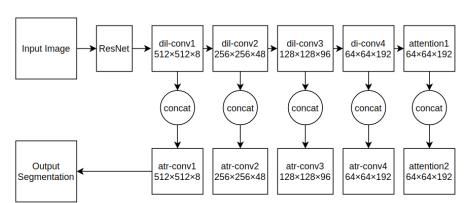

Semantic Segmentation with Multi Scale Spatial Attention for Self Driving Cars

ICCV 2021 Workshop

Uncertainty Quantification using Variational Inference for Biomedical Image Segmentation

WACV 2022 Workshop

Professional Service

Reviewer

AAAI 2026 Bridge Program on AI for Medicine and Healthcare

AISTATS 2026

AAAI 2026

WACV 2026

NeurIPS 2025 Ethics Reviewer

ICCV 2025 Workshop on Computer Vision for Biometrics, Identity & Behavior

ICCV 2025 Workshop on Distillation of Foundation Models for Autonomous Driving

ICCV 2025 Workshop on the Challenge of Out-of-Label Hazards in Autonomous Driving

ICCV 2025 Workshop on Computer Vision for Automated Medical Diagnosis

WACV 2024

CVPR 2023 Workshop on Multimodal Learning and Applications